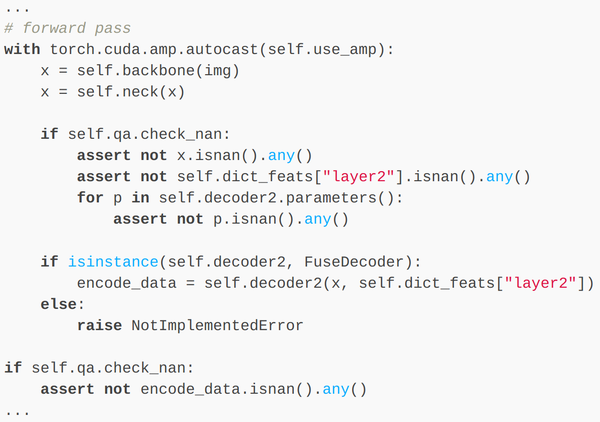

torch.cuda.amp.autocast causes CPU Memory Leak during inference · Issue #2381 · facebookresearch/detectron2 · GitHub

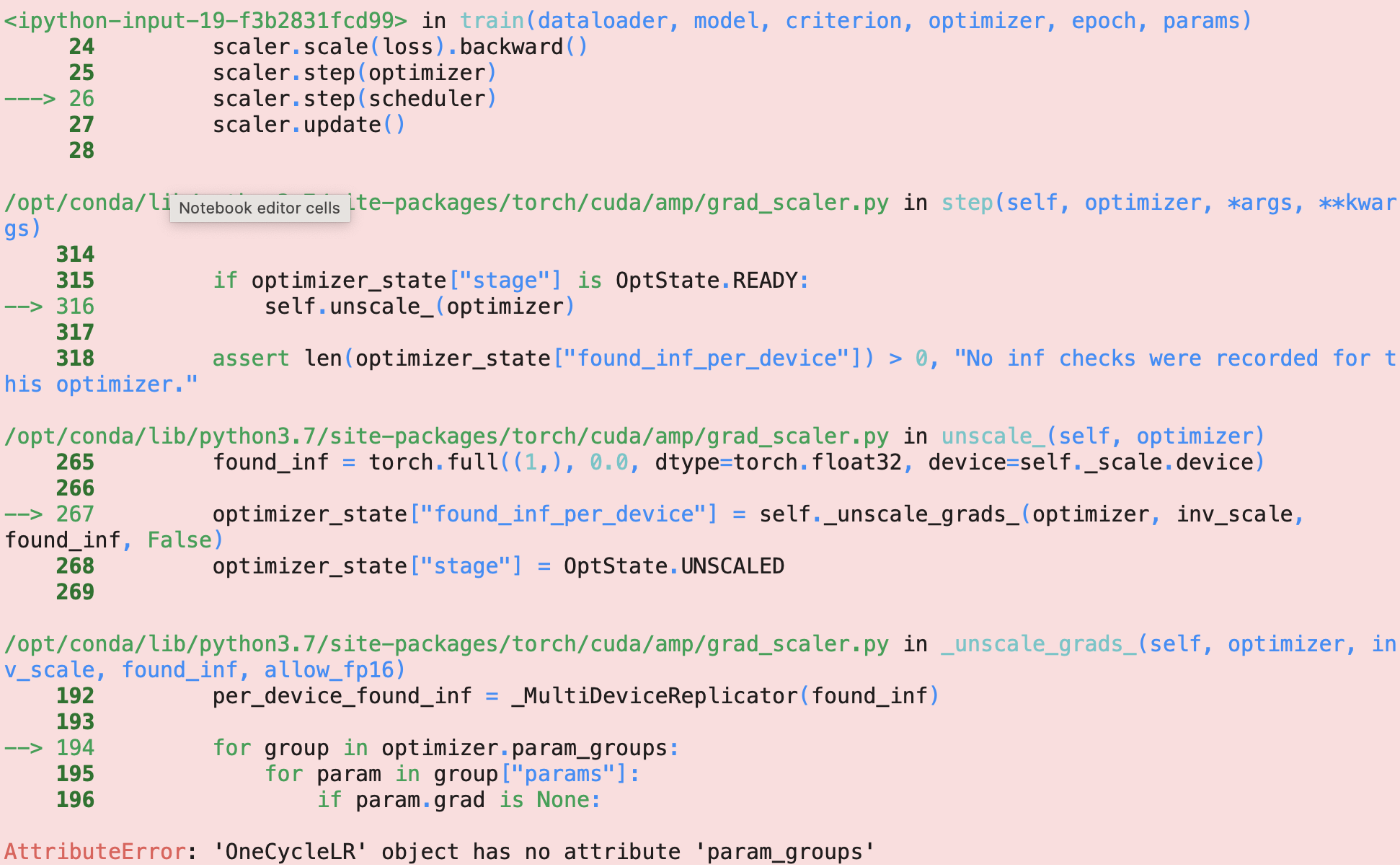

What is the correct way to use mixed-precision training with OneCycleLR - mixed-precision - PyTorch Forums
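This question usually arises because `GradScaler` can skip `optimizer.step()` when it finds inf/NaN gradients, while the scheduler is stepped every iteration regardless. A commonly suggested fix is to read the scale before `scaler.update()` and call `scheduler.step()` only if the scale did not drop. The pure-Python toy below models that bookkeeping. It is a sketch, not the torch API: `ToyLossScaler`, `step_and_update`, and `run` are hypothetical names. The init, growth, and backoff constants are assumed to mirror `GradScaler`'s defaults, and `growth_interval` is shortened so the demo shows scale growth.

```python
# Toy, pure-Python model of dynamic loss scaling; NOT the torch API.
# Constants assumed to mirror GradScaler's defaults (init 2**16, x2 growth,
# x0.5 backoff); growth_interval is shortened in run() so growth is visible.


class ToyLossScaler:
    """Hypothetical stand-in for GradScaler's step()/update() bookkeeping."""

    def __init__(self, init_scale=2.0**16, growth_factor=2.0,
                 backoff_factor=0.5, growth_interval=2000):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self._good_steps = 0  # consecutive steps with finite gradients

    def step_and_update(self, found_inf: bool) -> bool:
        """Return True if the optimizer step runs, False if it is skipped."""
        if found_inf:
            # inf/NaN gradients: skip the optimizer step and shrink the scale.
            self.scale *= self.backoff_factor
            self._good_steps = 0
            return False
        self._good_steps += 1
        if self._good_steps == self.growth_interval:
            # Enough clean steps in a row: try a larger scale.
            self.scale *= self.growth_factor
            self._good_steps = 0
        return True


def run(overflow_flags, growth_interval=3):
    """Count optimizer steps and gated scheduler steps over the iterations."""
    scaler = ToyLossScaler(growth_interval=growth_interval)
    optimizer_steps = 0
    scheduler_steps = 0
    for found_inf in overflow_flags:
        scale_before = scaler.scale
        if scaler.step_and_update(found_inf):
            optimizer_steps += 1
        # Gate the scheduler on the scale: a drop means the step was skipped.
        if scaler.scale >= scale_before:
            scheduler_steps += 1
    return optimizer_steps, scheduler_steps, scaler.scale


print(run([True, False, False, False, True, False]))  # (4, 4, 32768.0)
```

With the real library, the same gate is typically written by saving `scale = scaler.get_scale()` before `scaler.update()` and then calling `scheduler.step()` only when `scaler.get_scale() >= scale`. This keeps the OneCycleLR schedule aligned with the optimizer updates that actually happened.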

AttributeError: module 'torch.cuda.amp' has no attribute 'autocast' · Issue #22 · WongKinYiu/ScaledYOLOv4 · GitHub

PyTorch on X: "For torch <= 1.9.1, AMP was limited to CUDA tensors using `torch.cuda.amp.autocast()`. From v1.10 onwards, PyTorch has a generic API `torch.autocast()` that automatically casts * CUDA tensors to
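The version split described above can be turned into a small check that picks an autocast entry point. The sketch below is a hypothetical pure-Python helper: `autocast_entry_point` is not a PyTorch function. It maps a version string, such as the value of `torch.__version__`, to the dotted name of the autocast API available in that release. It assumes `torch.cuda.amp.autocast` first shipped in 1.6 and the device-generic `torch.autocast` in 1.10.

```python
from itertools import takewhile


def autocast_entry_point(torch_version: str):
    """Hypothetical helper: dotted name of the autocast API for a version.

    Assumes torch.cuda.amp.autocast exists from 1.6 and the device-generic
    torch.autocast from 1.10; returns None for older releases.
    """
    # Drop a local build suffix such as "+cu118", keep only major.minor.
    core = torch_version.split("+")[0]
    parts = [
        # Take the leading digits only, so "0a0" in "1.10.0a0" is harmless.
        int("".join(takewhile(str.isdigit, piece)) or 0)
        for piece in core.split(".")[:2]
    ]
    major, minor = (parts + [0, 0])[:2]
    if (major, minor) >= (1, 10):
        return "torch.autocast"
    if (major, minor) >= (1, 6):
        return "torch.cuda.amp.autocast"
    return None


print(autocast_entry_point("1.9.1"))  # torch.cuda.amp.autocast
```

Comparing `(major, minor)` as integer tuples, rather than comparing raw strings, avoids the trap where `"1.10"` sorts before `"1.9"` lexicographically.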

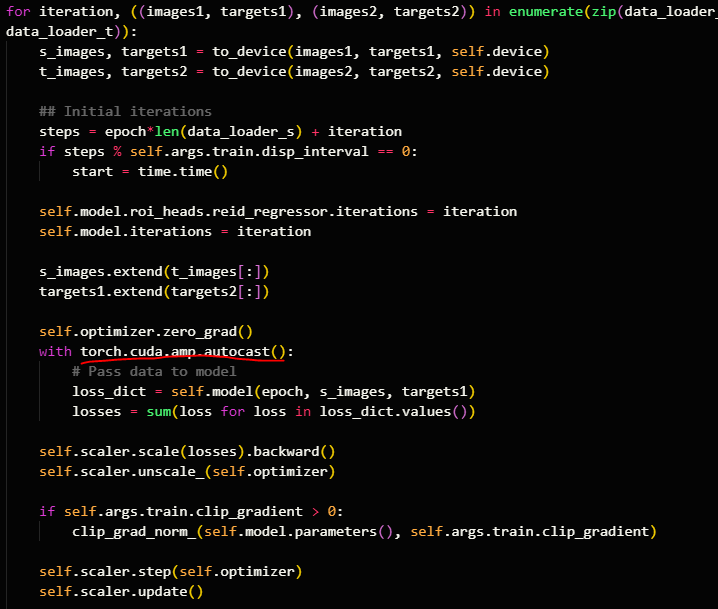

My first training epoch takes about 1 hour, whereas every epoch after that takes about 25 minutes. I'm using AMP, gradient accumulation, gradient clipping, `torch.backends.cudnn.benchmark=True`, the Adam optimizer, a scheduler with warmup, and ResNet + ArcFace. Is putting benchmark ...

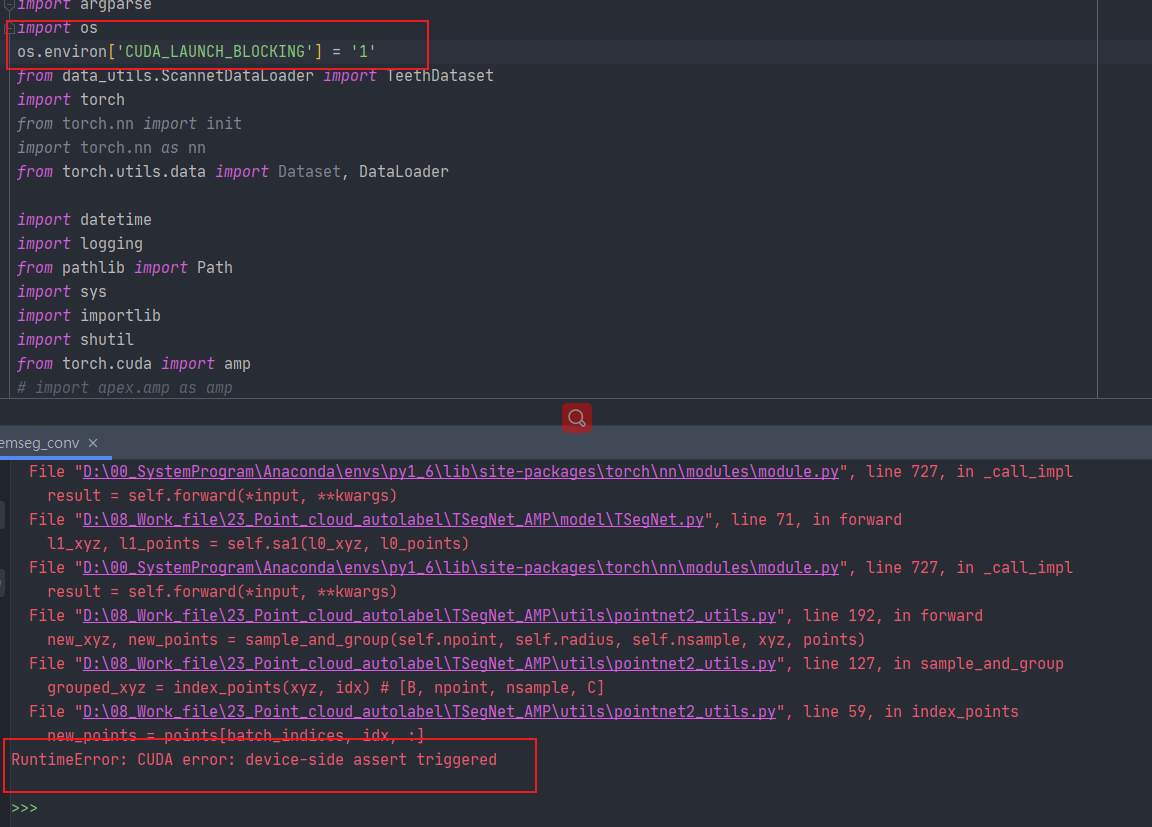

module 'torch' has no attribute 'autocast' is not a version problem_attributeerror: module 'torch' has no attribute 'a… - CSDN Blog

torch.cuda.amp, example with 20% memory increase compared to apex/amp · Issue #49653 · pytorch/pytorch · GitHub

![[pytorch] How to use Mixed Precision | torch.amp | torch.autocast | How to speed up model training and use memory efficiently](https://blog.kakaocdn.net/dn/c8agc2/btrT8ZHaguU/5Ht96EPtHn4nMoO95q7Rjk/img.png)