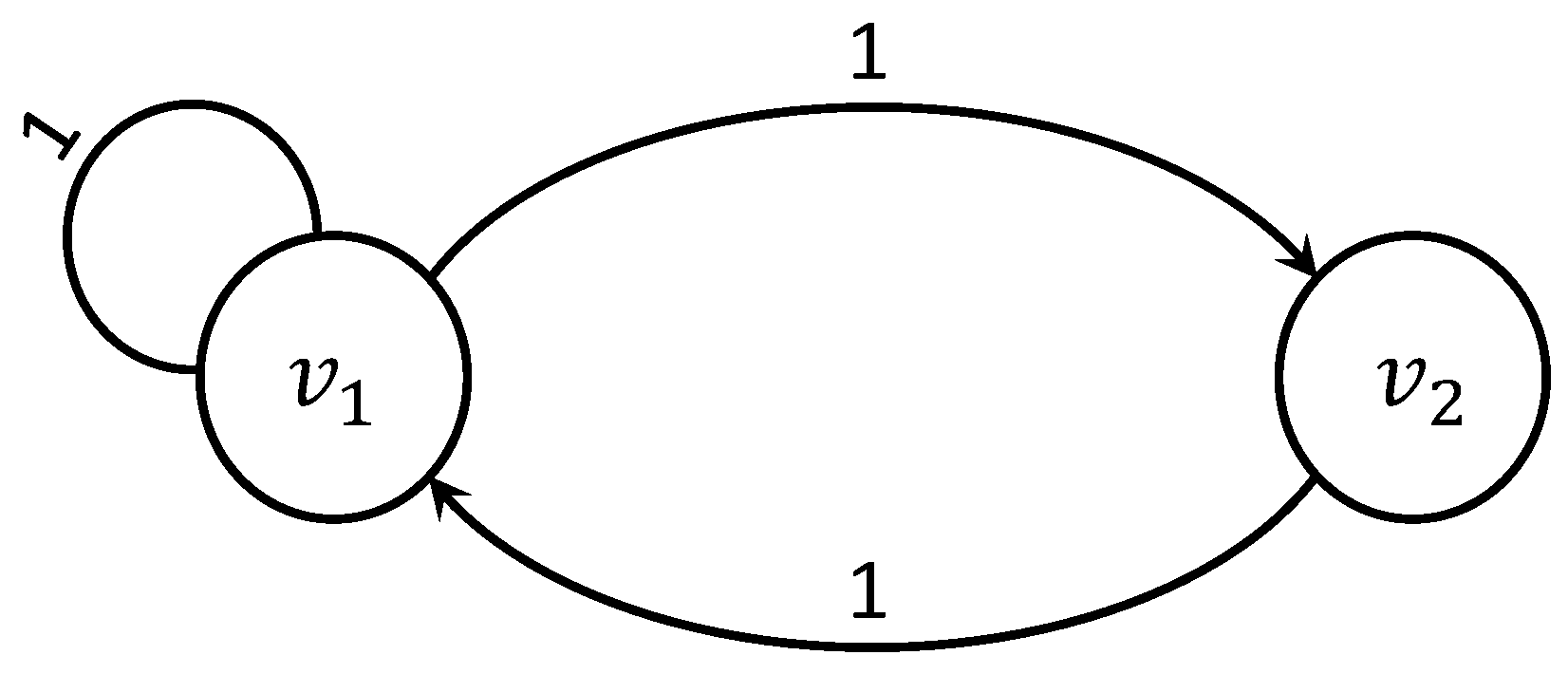

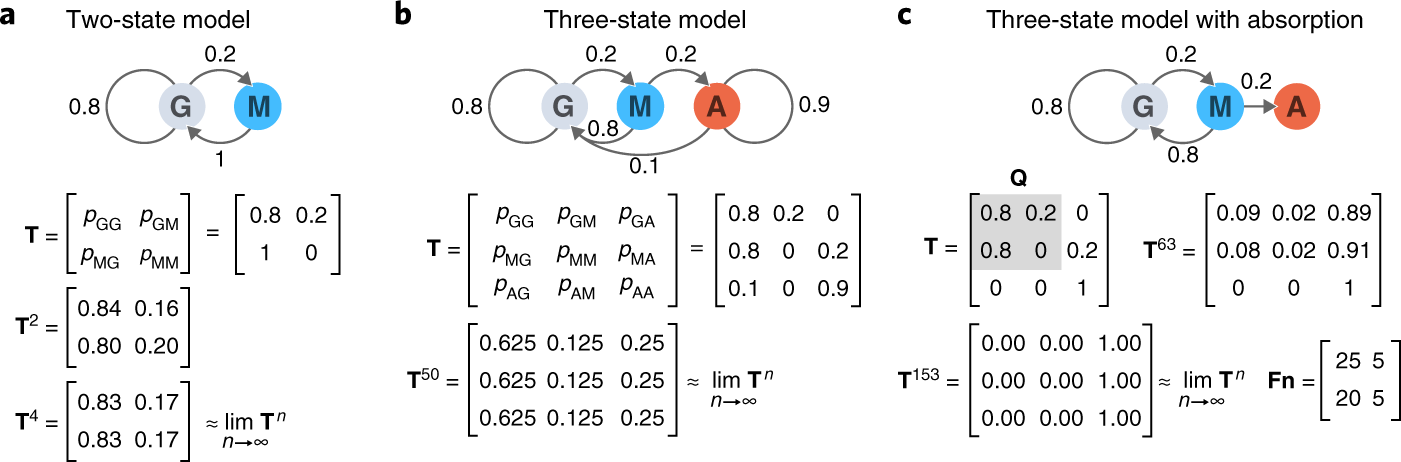

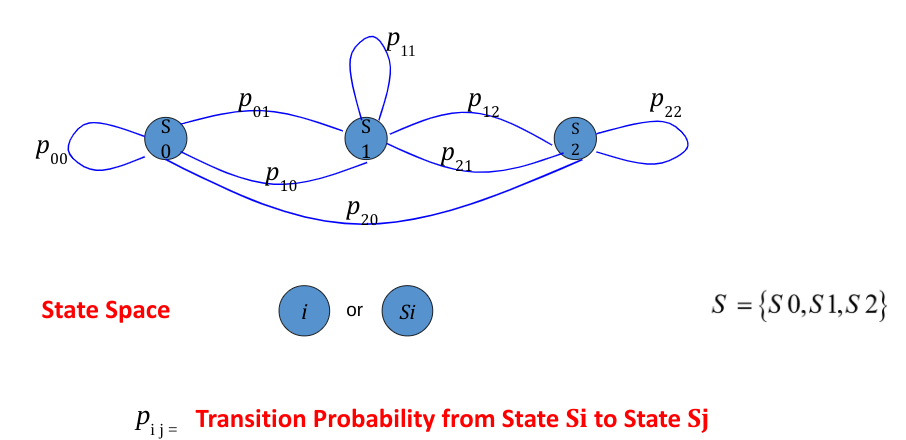

Markov chain of the two-state error model for modeling router's losses.
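Two-state loss models of this kind are often of the Gilbert type. A Good state delivers packets and a Bad state drops them, and the chain switches between the two states from packet to packet. The sketch below assumes that simplest variant: every packet is lost in Bad and none are lost in Good. The parameter names `p_gb` (Good to Bad) and `p_bg` (Bad to Good) are hypothetical, and the model in the original figure may be parametrized differently. The long-run loss rate equals the stationary probability of the Bad state, `p_gb / (p_gb + p_bg)`.

```python
import random

def simulate_gilbert_losses(p_gb, p_bg, n_packets, seed=0):
    """Simulate a two-state (Good/Bad) packet-loss process.

    Assumed (hypothetical) parametrization:
      p_gb: probability of moving Good -> Bad before each packet
      p_bg: probability of moving Bad -> Good before each packet
    Packets sent in Bad are lost; packets sent in Good are delivered.
    Returns a list of booleans, True meaning the packet was lost.
    """
    rng = random.Random(seed)
    bad = False  # start in the Good state
    losses = []
    for _ in range(n_packets):
        # Take one Markov-chain step, then send the packet in the new state.
        if bad:
            bad = rng.random() >= p_bg  # stay Bad with probability 1 - p_bg
        else:
            bad = rng.random() < p_gb   # enter Bad with probability p_gb
        losses.append(bad)
    return losses

def stationary_loss_rate(p_gb, p_bg):
    # Long-run fraction of time in Bad: pi_B = p_gb / (p_gb + p_bg).
    return p_gb / (p_gb + p_bg)

if __name__ == "__main__":
    losses = simulate_gilbert_losses(0.05, 0.4, 200_000, seed=1)
    print(f"empirical loss rate:  {sum(losses) / len(losses):.4f}")
    print(f"stationary loss rate: {stationary_loss_rate(0.05, 0.4):.4f}")
```

Because the Bad state persists with probability `1 - p_bg`, losses arrive in bursts with mean length `1 / p_bg`. An independent-loss model with the same average rate would not produce this burstiness.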

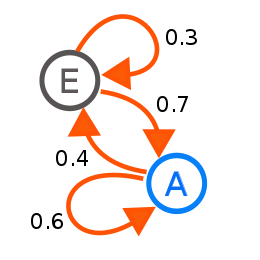

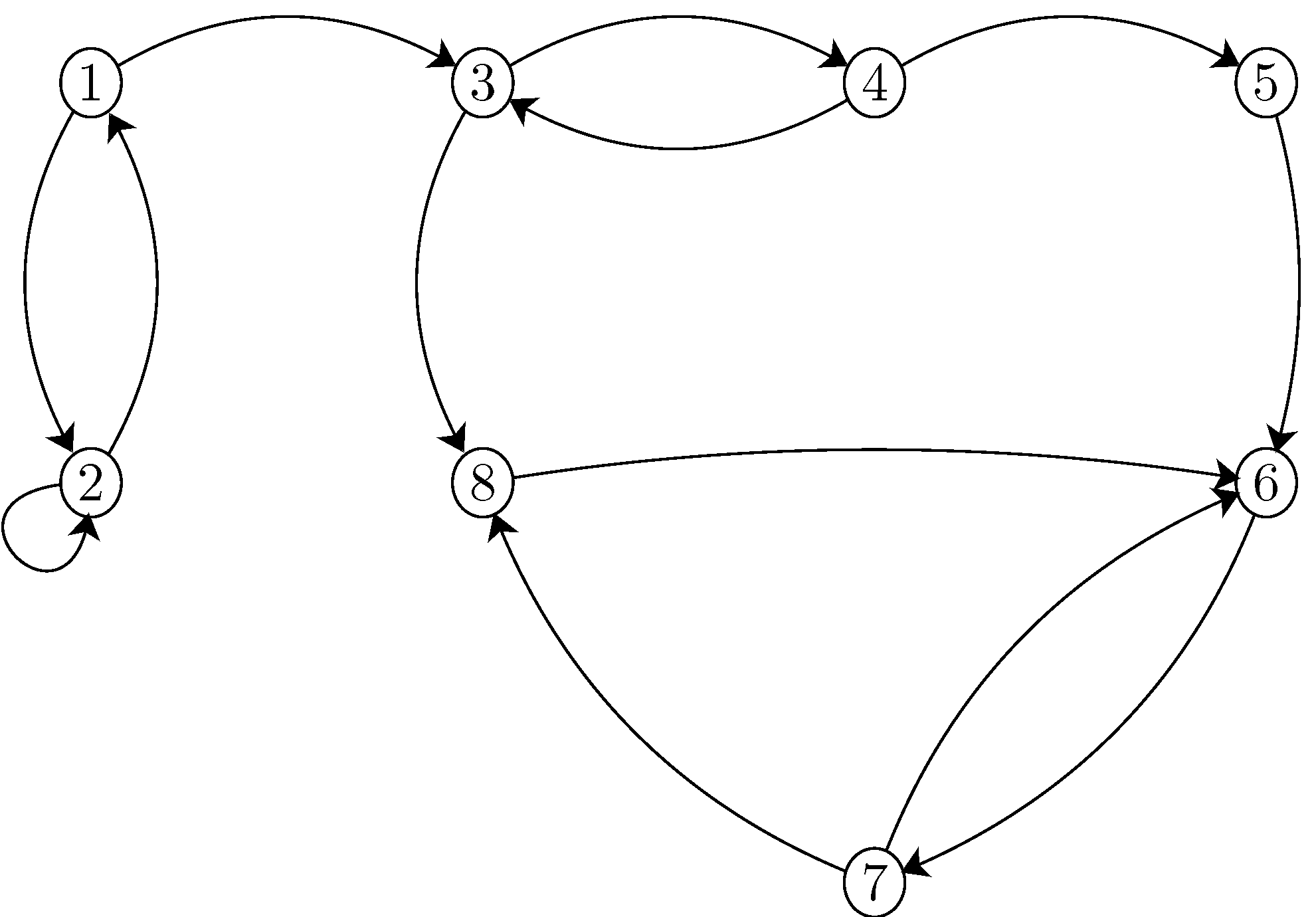

probability - What is the steady state of a Markov chain with two absorbing states? - Mathematics Stack Exchange
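A chain with two absorbing states has no single steady state. Any mixture of the two point masses is stationary, so the long-run distribution depends on the starting state. The sketch below uses a hypothetical three-state example in which states 0 and 2 are absorbing and state 1 is transient. It approximates the limiting distribution by repeatedly multiplying the distribution by P. Starting from state 1, the chain is absorbed at 0 or 2 with probability 0.3 / (0.3 + 0.3) = 1/2 each.

```python
def mat_vec_step(dist, P):
    """One step of the chain: returns the row vector dist @ P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

def long_run_distribution(P, start, steps=1000):
    """Approximate the limiting distribution from X_0 = start by iteration."""
    dist = [0.0] * len(P)
    dist[start] = 1.0
    for _ in range(steps):
        dist = mat_vec_step(dist, P)
    return dist

# Hypothetical example: states 0 and 2 absorbing, state 1 transient.
P = [
    [1.0, 0.0, 0.0],
    [0.3, 0.4, 0.3],
    [0.0, 0.0, 1.0],
]

if __name__ == "__main__":
    for s in range(3):
        print(s, [round(x, 6) for x in long_run_distribution(P, s)])
    # Any mixture of the two absorbing point masses is itself stationary.
    print(mat_vec_step([0.25, 0.0, 0.75], P))
```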

![SOLVED: 5. Let the transition probability matrix of a two-state Markov chain be given by P; show the closed form of P^n](https://cdn.numerade.com/ask_images/23499774552e4c11a165e647ef718e10.jpg)

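SOLVED: 5. Let the transition probability matrix of a two-state Markov chain be given by $P = \begin{pmatrix} p & 1-p \\ 1-p & p \end{pmatrix}$. Show that $P^{(n)} = \begin{pmatrix} \tfrac{1}{2} + \tfrac{1}{2}(2p-1)^n & \tfrac{1}{2} - \tfrac{1}{2}(2p-1)^n \\ \tfrac{1}{2} - \tfrac{1}{2}(2p-1)^n & \tfrac{1}{2} + \tfrac{1}{2}(2p-1)^n \end{pmatrix}$.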
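The garbled caption appears to be the classic exercise with P = [[p, 1−p], [1−p, p]]. It asks you to show that the n-step matrix has diagonal entries ½ + ½(2p−1)^n and off-diagonal entries ½ − ½(2p−1)^n. The factor (2p−1)^n comes from the second eigenvalue of P, which is 2p−1. The first eigenvalue is 1. The proof itself goes by induction using P^(n+1) = P^(n) P. The sketch below is only a numerical sanity check, and it assumes that reading of the problem. It compares the closed form against repeated matrix multiplication for a few values of p and n.

```python
def mat_mul(A, B):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def mat_pow(P, n):
    """P^n by repeated multiplication, starting from the identity."""
    result = [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(n):
        result = mat_mul(result, P)
    return result

def closed_form(p, n):
    """Claimed n-step matrix: 1/2 +/- 1/2 * (2p - 1)^n."""
    r = (2 * p - 1) ** n
    return [[0.5 + 0.5 * r, 0.5 - 0.5 * r],
            [0.5 - 0.5 * r, 0.5 + 0.5 * r]]

def max_abs_diff(A, B):
    return max(abs(A[i][j] - B[i][j]) for i in range(2) for j in range(2))

if __name__ == "__main__":
    for p in (0.0, 0.3, 0.5, 0.9, 1.0):
        P = [[p, 1 - p], [1 - p, p]]
        for n in range(8):
            assert max_abs_diff(mat_pow(P, n), closed_form(p, n)) < 1e-12
    print("closed form matches repeated multiplication")
```

When 0 < p < 1 we have |2p−1| < 1, so every entry tends to ½. The chain forgets its starting state, and the uniform distribution is its limit.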

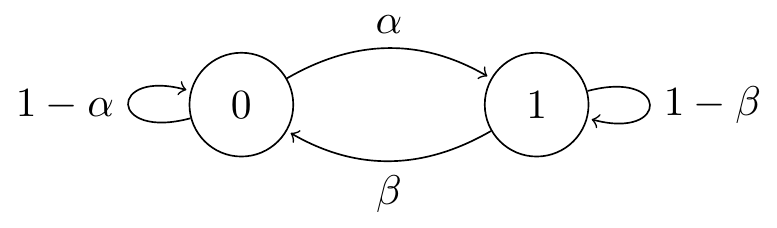

SOLVED: Problem 3. (25 points) Let the transition probability matrix of a two-state Markov chain be given by $P = \begin{pmatrix} 1-p & p \\ 1-p & p \end{pmatrix}$. For which values of p ∈ [0,

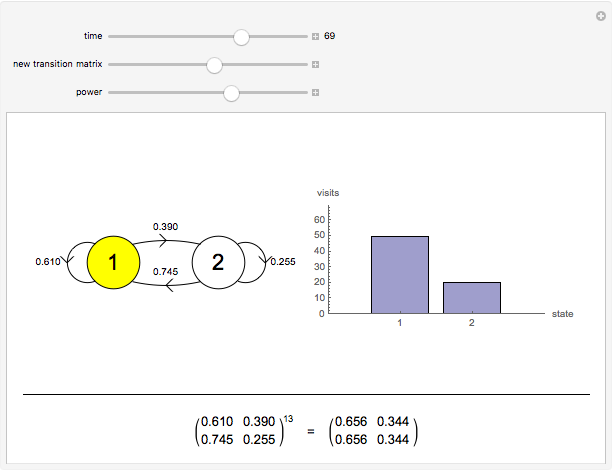

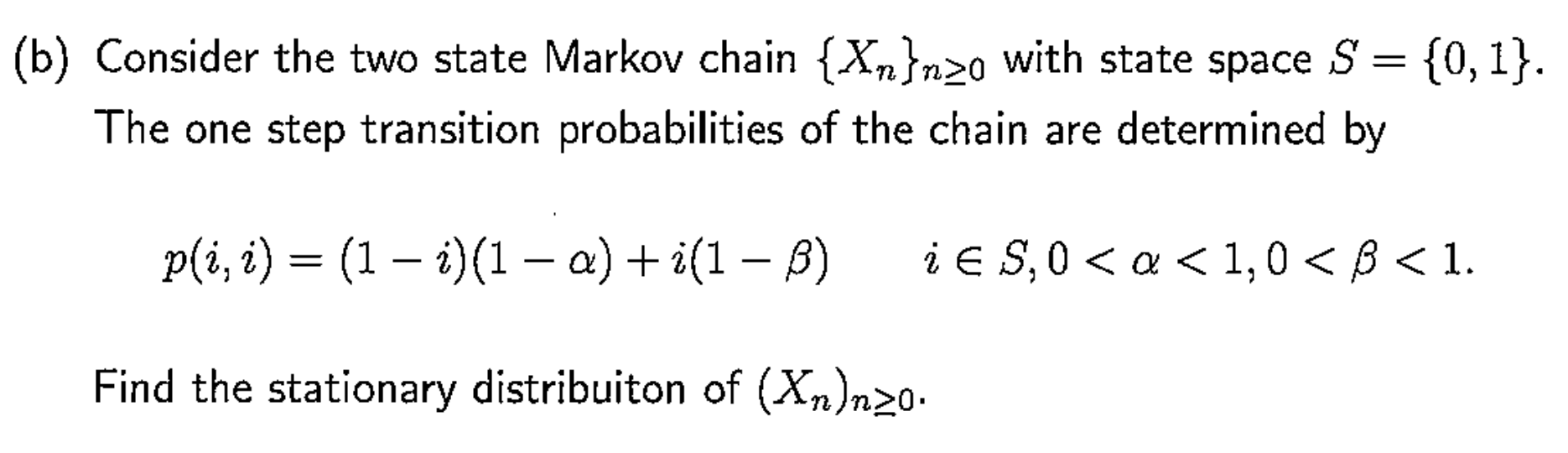

probability - What is the significance of the stationary distribution of a Markov chain given its initial state? - Stack Overflow
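For an irreducible, aperiodic finite chain, the initial state affects only the transient behaviour. The distribution of X_n converges to the stationary distribution π from any starting state. The sketch below illustrates this for a general two-state chain written as P = [[1−α, α], [β, 1−β]]. The names `alpha` and `beta` and their values are illustrative assumptions. Solving πP = π gives π = (β/(α+β), α/(α+β)), and the gap to π shrinks like |1−α−β|^n.

```python
def stationary_two_state(alpha, beta):
    """Stationary distribution of P = [[1-alpha, alpha], [beta, 1-beta]].

    Solving pi P = pi with pi_0 + pi_1 = 1 gives
    pi = (beta / (alpha + beta), alpha / (alpha + beta)); needs alpha + beta > 0.
    """
    total = alpha + beta
    return (beta / total, alpha / total)

def distribution_after(alpha, beta, start, n):
    """Distribution of X_n given X_0 = start, iterating pi_{k+1} = pi_k P."""
    p0, p1 = (1.0, 0.0) if start == 0 else (0.0, 1.0)
    for _ in range(n):
        p0, p1 = p0 * (1 - alpha) + p1 * beta, p0 * alpha + p1 * (1 - beta)
    return (p0, p1)

if __name__ == "__main__":
    alpha, beta = 0.2, 0.5  # hypothetical transition probabilities
    print("stationary:", stationary_two_state(alpha, beta))
    for start in (0, 1):
        for n in (1, 5, 20):
            print(start, n, distribution_after(alpha, beta, start, n))
```

Aperiodicity matters. With α = β = 1 the chain alternates deterministically, so the distribution of X_n oscillates between (1, 0) and (0, 1) and never converges. Even so, π = (½, ½) still exists and still gives the long-run fraction of time spent in each state.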